The digital media revolution set out to make the production of media content simpler and more accessible to the public, and in many ways it has succeeded. Color management is not one of them. Cinema color management went from dealing with imagery captured on a select few film stocks to a landscape where every camera on the market is equipped with its very own ‘color science’ (although it would be more aptly named ‘color processing’, since there is no such thing as a proprietary science). Naming aside, the complexity of these signal processing schemes has made it extremely difficult for cinema colorists to manually adjust clips sourced from different camera models so that they can be cut together in sequence without jarring color differences. Luckily, the Academy Color Encoding System (ACES) [1] has been in development for over a decade to combat this issue. However, using this system to homogenize clips from different cameras requires that the devices be fully characterized. In many cases users do not have access to these profiles or to the equipment necessary to profile a device, or they are working with material for which the capture details are unknown. This makes tools that automatically homogenize color rendering between any given pair of inputs extremely desirable.

Until recently, the broadcast industry (within which the Admire project operates) has not had the same issues as cinema, because the broadcast imaging pipeline adheres more strictly to the color processing standards of the International Telecommunication Union (ITU) and the Society of Motion Picture and Television Engineers (SMPTE). However, the adaptations broadcasters made during the COVID-19 pandemic to support input from a range of consumer devices have made color homogenization a more relevant problem for the industry, as inconsistent color rendering between correspondents can greatly degrade the appearance of a program. The same is true for the Admire project, which is similarly working to facilitate greater at-home participation and therefore requires that its pipeline also accommodate common capture devices such as mobile phones and webcams.

At the Image Processing for Enhanced Cinematography Group at Universitat Pompeu Fabra, we have been working for a number of years on two different strategies for color homogenization. The first, called color stabilization [2], requires that the source and reference images share common scene elements. The method identifies pixel locations that correspond to the same object in the two images, then optimizes the parameters of a simplified camera color processing pipeline to find the best match across all correspondence points. Within the last year, as part of the work for another European Research Council project called SAUCE, we explored the applicability of our method to light-field imaging and presented our findings at the 2020 IEEE (virtual) International Conference on Acoustics, Speech and Signal Processing [3]. A video of our presentation can be found here.
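As a rough illustration of the correspondence-based fitting described above, the sketch below assumes a deliberately simple pipeline model: a single power-law exponent followed by a 3×3 color matrix. This model, the grid search over the exponent, and the function names `fit_gamma_matrix` and `apply_gamma_matrix` are assumptions made for illustration; they are not the formulation published in [2].

```python
import numpy as np

def fit_gamma_matrix(src_pts, ref_pts, gammas=None):
    """Fit ref ~= (src ** gamma) @ M from N x 3 corresponding colors.

    Hypothetical simplified model: one exponent plus a 3x3 matrix.
    The exponent is found by grid search, the matrix by least squares.
    """
    if gammas is None:
        gammas = np.linspace(0.3, 3.0, 271)  # assumed search range
    best_err, best_gamma, best_M = np.inf, None, None
    for g in gammas:
        lin = np.power(src_pts, g)                      # undo/apply the nonlinearity
        M, *_ = np.linalg.lstsq(lin, ref_pts, rcond=None)
        err = np.sum((lin @ M - ref_pts) ** 2)          # squared matching error
        if err < best_err:
            best_err, best_gamma, best_M = err, g, M
    return best_gamma, best_M

def apply_gamma_matrix(image, gamma, M):
    """Apply the fitted model to every pixel of an (..., 3) array."""
    flat = np.clip(image.reshape(-1, 3), 0.0, None)     # avoid negative bases
    return (np.power(flat, gamma) @ M).reshape(image.shape)
```

In practice the correspondence points would come from a feature-matching step between the two images, and outlier matches would need to be rejected before fitting; both steps are outside the scope of this sketch.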

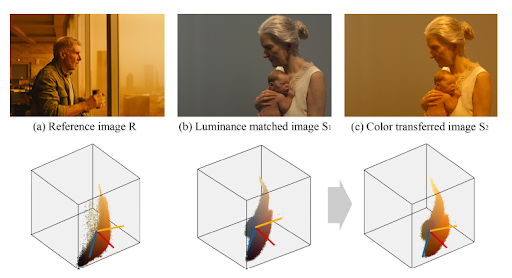

The second method, called style transfer [4], may be more directly useful for the Admire application. In this case, any arbitrary source and reference can be chosen, with no requirement of common scene content. Without correspondences, the method instead transforms the source and reference into a common display-referred color representation (one pertaining to the light coming from the display, as opposed to the light coming from the scene) and then performs a series of transforms to match the statistical properties of the two images. Namely, the method matches the principal axes of the 3D cloud of image color points (Figure 1) in order to impart the color rendering style of the reference onto the source. The results, shown in Figure 2, demonstrate the effectiveness of this approach. Currently, the group is refining this method and testing others for the Admire application, using live broadcast images from project partners TVR, Premiere Sports, and NRK, as well as sample user-submitted images (selfies), as test content, with the goal of imparting the studio ‘look’ onto the at-home participant.
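The idea of matching the principal axes of two color point clouds can be sketched as follows. This is a generic mean-and-covariance alignment written for illustration, not the procedure from [4]: it assumes both images are already floating-point RGB arrays in a common display-referred encoding, and the function names `principal_axes` and `match_principal_axes` are hypothetical.

```python
import numpy as np

def principal_axes(points):
    """Mean, variances, and principal axes of an N x 3 color cloud."""
    mean = points.mean(axis=0)
    cov = np.cov(points, rowvar=False)
    variances, axes = np.linalg.eigh(cov)        # ascending eigenvalues
    return mean, np.clip(variances, 1e-12, None), axes

def match_principal_axes(source, reference):
    """Map source colors so their cloud shares the reference's mean,
    principal axes, and spread along each axis."""
    src = source.reshape(-1, 3).astype(np.float64)
    ref = reference.reshape(-1, 3).astype(np.float64)
    mu_s, var_s, axes_s = principal_axes(src)
    mu_r, var_r, axes_r = principal_axes(ref)

    # Eigenvectors are defined up to sign; orient each reference axis
    # toward its source counterpart to avoid needless color flips.
    signs = np.sign(np.sum(axes_s * axes_r, axis=0))
    signs[signs == 0] = 1.0
    axes_r = axes_r * signs

    # Rotate into source axes, rescale each axis, rotate into reference axes.
    transform = axes_r @ np.diag(np.sqrt(var_r / var_s)) @ axes_s.T
    out = (src - mu_s) @ transform.T + mu_r
    return out.reshape(source.shape)
```

After this mapping the output cloud has the same mean and covariance as the reference. Values can fall outside the displayable range, so a real pipeline would need clipping or gamut mapping afterwards, and pairing axes by eigenvalue order is a simplification that can behave poorly when two variances are nearly equal.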

Figure 1. Geometric representation of the color processing from Zabaleta [4].

Figure 2. Results from Zabaleta [4] for a collection of source (top) and reference (left) images.

References

[1] The Academy Color Encoding System (ACES), The Academy of Motion Picture Arts and Sciences (AMPAS) available online: https://acescentral.com/

[2] Gil Rodríguez R, Vazquez-Corral J, Bertalmío M. 2018. Color matching images with unknown non-linear encodings. IEEE Transactions on Image Processing.

[3] O. V. Thanh, T. Canham, J. Vazquez-Corral, R. G. Rodríguez and M. Bertalmío, “Color Stabilization for Multi-Camera Light-Field Imaging,” ICASSP 2020 – 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020, pp. 2148-2152, doi: 10.1109/ICASSP40776.2020.905

[4] Zabaleta I, Bertalmío M. 2018. In-camera, Photorealistic Style Transfer for On-set Automatic Grading. Society of Motion Picture & Television Engineers Annual Technical Conference & Exhibition.

Trevor Canham / UPF