One of the main tasks of the partner EPFL in the AdMiRe project, consists in comparing state of the art solutions for foreground extraction. In this post, we provide a brief summary of a typical comparison a foreground extractor, designed by EPFL in the framework of the AdMiRe project, with a recent foreground extraction tool that has been released as part of the NVIDIA Maxine, a GPU-accelerated SDK, allowing developers to build various applications requiring virtual background, such in video conferencing and live streaming (https://developer.nvidia.com/maxine). It is important to note that EPFL solution is a work in progress and future releases are expected with better performance.

The comparison is carried out along several criteria, namely, achievable frame rate in real-time, visual quality of the foreground extraction and the objective metric intersection over union (IOU) that provides a quantitative measure on how close the extracted foreground object is to its groundtruth.

The table below shows the achievable number of frames per second in real-time, achievable by each approach, then using the same platform. To estimate the above, the processing time of each approach was measured on several video sequences of different resolutions and their average reported.

NVIDIA’s solution is faster when compared to EPFL’s, as it is highly optimized using NVIDIA TensorRT for high-performance inference. Both EPFL and NVIDIA solutions can reach real time for resolutions up to 1080p with a frame rate of 25 frames per second for the former and 50 frames per second for the latter. None, can reach real-time performance for 4K resolution on the platform used.

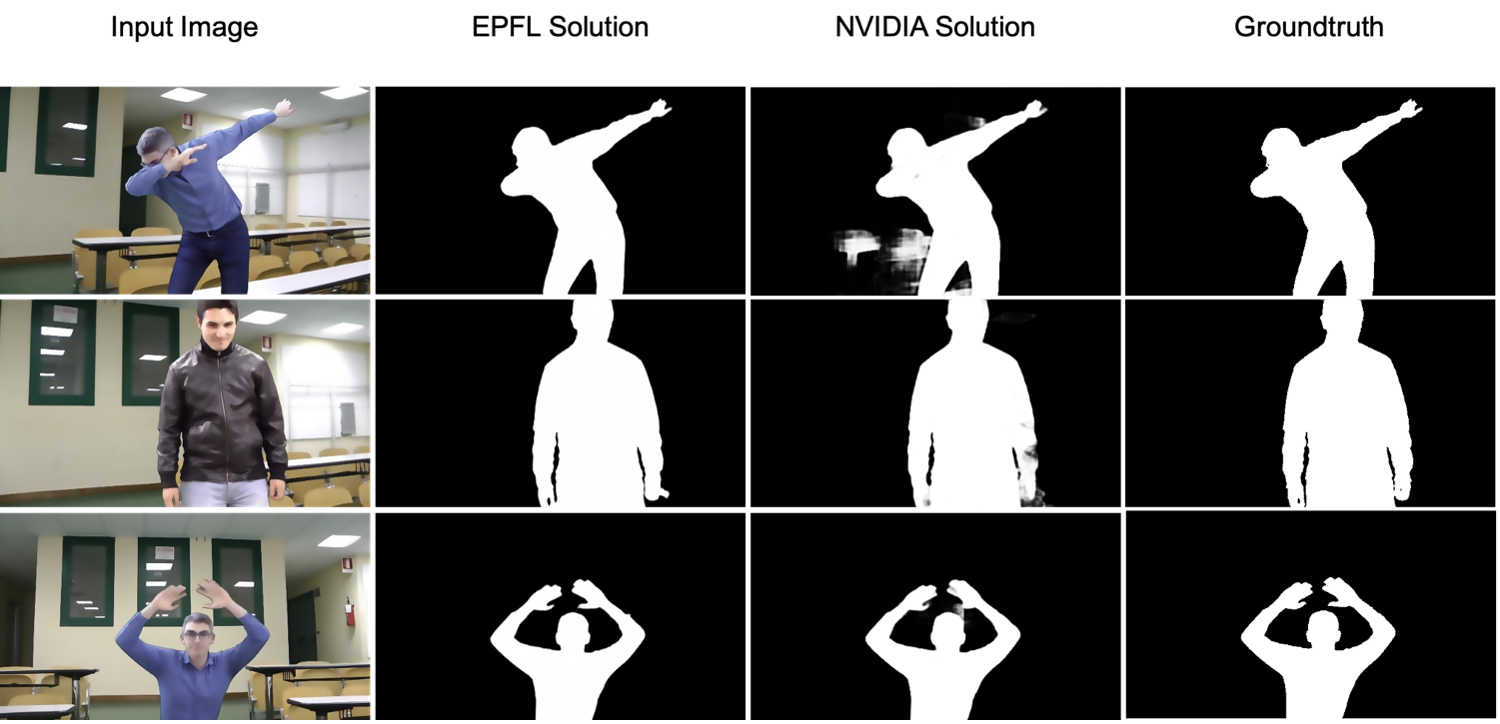

The quality of the foreground extraction largely depends on the temporal characteristics of the video and, in particular on the type of movement of the foreground object in the scene, which is a human subject in use cases under investigation in AdMiRe project. When the subject remains close to static or exhibits smooth movements, both approaches perform well in extracting the foreground, reaching good performance when compared to the groundtruth. However, in situations where the foreground subject performs brusque movements, both algorithms produce degraded foreground, with NVIDIA solution generally performing worse when compared to EPFL.

The above observation was further analyzed using a state-of-the-art dataset for foreground extraction known as “human segmentation dataset”. The table below provides a numerical metric based on IOU to quantify the performance of EPFL and NVIDIA quantitatively.

The illustration above depicts three typical scenes where the quality of EPFL and NVIDIA solutions can be visually compared to each other and that of the groundtruth, highlighting the typical degradations when the occur.

Post written by EPFL