Many have pondered what would happen if, all else staying the same, just one thing changed: if they had hair like Dolly Parton, say, or money like Warren Buffett. In many ways, computing has given us an opportunity to test these sorts of alterations in a contained, simulated environment. We edit our photos for social media, pruning details we do not want to represent us and subtly synthesizing wholly new characteristics that bring us closer to our imagined ideal. It is this curiosity to experience what X would be like if it took on the characteristics of Y that fuels the study of style transfer.

The original works in this area, such as Reinhard’s “Color Transfer between Images” [1], simply aimed to transfer basic statistical properties of a reference image (pertaining to its brightness and contrast) onto a source. In doing so, the authors produced a new version of the source image that reflected some of the color properties of the reference, which themselves represent the combined effect of its capture medium, editing, and even content (Figure 1). The field gained a boost in popularity with the seminal work of Gatys [3], “Image Style Transfer Using Convolutional Neural Networks,” which leveraged then-recent developments in machine learning to allow for an impressive transfer of the artistic styles of renowned painters like van Gogh or Picasso onto regular photographs (Figure 2).

Figure 1. Example using the method of [1] presented in [2]. The color palette of the middle image is applied to the left image to achieve the result shown on the right.
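The statistical transfer described above can be sketched in a few lines. This is a minimal illustration rather than the implementation of [1]: it assumes each image channel is given as a flat list of floats, it matches only the mean and standard deviation of each channel, and it omits the conversion to a decorrelated color space that the original method performs first. The function names `transfer_channel` and `transfer_image` and the toy values are hypothetical.

```python
from statistics import fmean, pstdev


def transfer_channel(source, reference):
    """Match the mean and standard deviation of one channel to a reference.

    Each source value is centred on the source mean, rescaled by the ratio
    of standard deviations, and re-centred on the reference mean.
    """
    src_mean, ref_mean = fmean(source), fmean(reference)
    src_std, ref_std = pstdev(source), pstdev(reference)
    # Guard against a flat source channel (zero spread): only shift the mean.
    scale = ref_std / src_std if src_std > 0 else 1.0
    return [(v - src_mean) * scale + ref_mean for v in source]


def transfer_image(source_channels, reference_channels):
    """Apply the per-channel transfer to every channel independently."""
    return [
        transfer_channel(src, ref)
        for src, ref in zip(source_channels, reference_channels)
    ]


# Toy single-channel example (hypothetical values, not real image data).
source = [0.10, 0.20, 0.30, 0.40]
reference = [0.50, 0.60, 0.90, 1.00]
result = transfer_channel(source, reference)
```

After the transfer, `result` has the same mean and standard deviation as `reference`, while the relative ordering of the source values is unchanged. Because there are only two statistics per channel and no training step, a transfer of this kind is fast and easy to reason about.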

Figure 2. Examples from [3], where the method is used to convert the input image on the right to the styles of van Gogh and Picasso.

From here the field snowballed, producing hundreds of works under the “Neural Style Transfer” umbrella (the large majority of them funded by the National Key Research and Development Program of China), which aimed specifically to transfer these painting styles or to appropriate other characteristics, such as those of individuals or species.

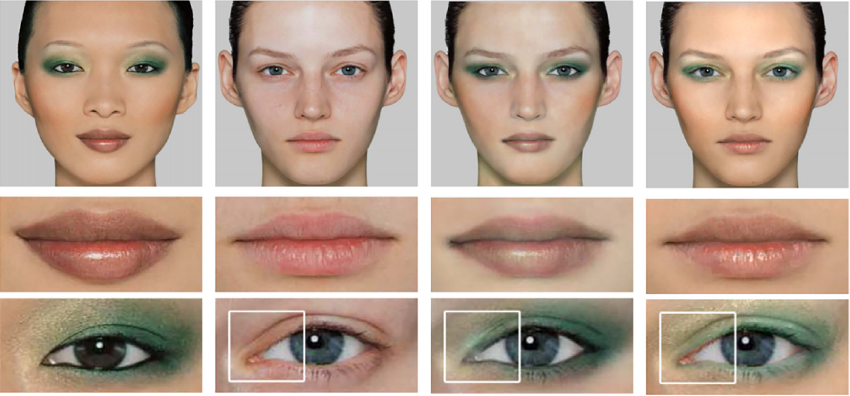

Examples of popular applications include transferring makeup from one individual to another, transferring dog breeds, and deep-fakes (Figures 3-5). Currently, these methods are widely implemented as filters on social media platforms and are used for both entertaining and nefarious purposes, as hackers successfully take control of the public image of ordinary people to scam their friends and followers. Attacks commonly occur in which hackers take control of social media accounts (or simply create new ones under the same name) and make convincing deep-fake videos in which the victims appear to personally vouch for fraudulent cryptocurrency or to appear in homemade pornography.

Figure 3. Examples from [4], where the content images of various big cats are matched to style images of small cats.

Figure 4. Example from [5]. From left to right, the makeup style is applied to the source, using two different methods [6] and [7].

Figure 5. Examples of semantic modifications to an input image from a review on deep-fake generation and detection [8].

Meanwhile, with all this excitement going on, methods for transferring simple photographic color grading styles fell by the wayside in spite of their ever-present utility for the filmmaking and photographic communities. Among other applications, the AdMiRe project presented a need for a fast, automatic, and reliable method for homogenizing user-submitted mobile phone videos with higher quality studio broadcasts. Now the reader may be thinking: if a Neural Style Transfer method can be used to turn your friend into a cryptocurrency sage, couldn’t it easily be tuned down to accomplish these simpler tasks?

The unfortunate answer is no, for a number of reasons. First and foremost, these methods need to be specially trained for the very specific type of transfer they accomplish. So, for example, if a method is trained to make dogs look like foxes, it likely will not succeed in making cats look like tigers. While the trained instances work quite smoothly as social media filters, their training is generally quite resource intensive, with some methods recommending the use of 50+ Graphics Processing Units. Even after being trained, many methods require input images to be segmented and semantically labelled (meaning each object has to be outlined and identified), which can be difficult to accomplish. Finally, these methods are black boxes with no human-readable parameters, so they cannot be tuned in any meaningful way, which makes them poorly suited to anything beyond consumer-targeted automatic functionality. In addition to highlighting these problems, a recent review by Jing [9] indicates that over 90% of the methods in the field are not advertised to produce photorealistic results. Furthermore, applying the most popular recent style transfer methods that do advertise this capability (Li [10] and Luan [11]) to unfamiliar images produces results with artifacts that fall below the quality standards of the filmmaking and photographic communities (Figures 6 and 7).

Figure 6. Example presented by the authors of [10], where blotching artifacts are present.

Figure 7. From left to right: source image, reference image, our result, and the result of [11], which shows clear artifacts.

In comparison with the hundreds of Neural Style Transfer methods, relatively few works have been published in recent years which accomplish style transfer by any other means. Among them, many use the same transfer mechanism as Reinhard, but add pre-processing steps which isolate related image content in the source and reference to make more targeted color transfers (e.g. the color of foliage is applied only to other foliage). During the course of the AdMiRe project, we developed a method which directly extends the mechanism of Reinhard by transferring additional statistical moments and texture characteristics (Figure 8). This method is fast, tunable, produces minimal artifacts, and requires no training. Several other notable methods have been presented in recent years, including the L2 divergence method of Grogan [12], the method of Zabaleta [13], and Photoshop’s “Match Image” filter. Moving forward, we will be launching an online validation experiment which compares our results against these methods. You can participate in the experiment using the link below, for as long as trials are being conducted: https://run.pavlovia.org/TrevorCanham/decoupled_style_transfer

Figure 8. The top row shows the source and reference images. On the bottom row, our method (left) is compared to that of Reinhard [1] (right). One can observe that the properties of the source image are significantly better represented in our result, exemplifying the benefit of transferring additional statistical properties. Source provided by European Southern Observatory, reference provided by John Purvis. All images uploaded to Flickr and licensed under CC-BY 2.0.
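One way to see why transferring additional statistical moments can matter is to look at what mean and standard deviation matching leaves untouched. The sketch below is not the AdMiRe method, whose details are not given here. It only checks, on hypothetical toy values, that a shift-and-scale transfer keeps the skewness (third standardized moment) of the source regardless of the reference. The helper names `skewness` and `match_mean_std` are assumptions made for illustration.

```python
from statistics import fmean, pstdev


def skewness(values):
    """Third standardized moment: 0 for symmetric data, positive for a long right tail."""
    mean, std = fmean(values), pstdev(values)
    return fmean([((v - mean) / std) ** 3 for v in values])


def match_mean_std(source, reference):
    """Match only the first two moments (mean and standard deviation) of one channel."""
    src_mean, src_std = fmean(source), pstdev(source)
    ref_mean, ref_std = fmean(reference), pstdev(reference)
    return [(v - src_mean) * (ref_std / src_std) + ref_mean for v in source]


# Hypothetical toy channels: the source has a long right tail,
# the reference has a long left tail.
source = [0.1, 0.1, 0.2, 0.2, 0.9]
reference = [0.1, 0.8, 0.8, 0.9, 0.9]
result = match_mean_std(source, reference)

print("source skewness:   ", skewness(source))
print("reference skewness:", skewness(reference))
print("result skewness:   ", skewness(result))
```

The printed skewness of `result` matches that of `source`, not `reference`, because a positive shift-and-scale cannot change the shape of a distribution. Any method that aims to carry over higher moments therefore needs something beyond this linear mapping.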

References

[1] Reinhard, E., Ashikhmin, M., Gooch, B., Shirley, P.: ‘Color transfer between images’, IEEE Comput. Graph. Appl., 2001, 21, (5), pp. 34–41

[2] Pitié, F. ‘Advances in colour transfer’, IET Comput. Vis., 2020, Vol. 14 Iss. 6, pp. 304-322

[3] L. A. Gatys, A. S. Ecker, and M. Bethge, ‘A neural algorithm of artistic style’, 2015. [Online]. Available: https://arxiv.org/abs/1508.06576

[4] M. Liu, X. Huang, A. Mallya, T. Karras, T. Aila, J. Lehtinen, J. Kautz, ‘Few-Shot Unsupervised Image-to-Image Translation,’ 2019 International Conference on Computer Vision

[5] X. Ma, F. Zhang, H. Wei, L. Xu, ‘Deep learning method for makeup style transfer: A survey’, Cognitive Robotics 1 (2021) 182–187

[6] D. Tong, CK. Tang, MS. Brown, et al., ‘Example-based cosmetic transfer’ [C], IEEE Computer Society, 2007.

[7] G. Dong, T. Sim, ‘Digital face makeup by example’ [C], Computer Vision and Pattern Recognition, CVPR 2009, IEEE, 2009.

[8] M. Masood, M. Nawaz, K. Mahmood Malik, A. Javed, A. Irtaza. ‘Deepfakes Generation and Detection: State-of-the-art, open challenges, countermeasures, and way forward’, CoRR abs/2103.00484 (2021)

[9] Y. Jing, Y. Yang, Z. Feng, J. Ye, Y. Yu, ‘Neural style transfer: A review’, IEEE Transactions on Visualization and Computer Graphics, November 2020

[10] Y. Li, M.-Y. Liu, X. Li, M.-H. Yang, and J. Kautz, “A closed-form solution to photorealistic image stylization,” in Proc. Eur. Conf. Comput. Vis., 2018, pp. 468–483.

[11] F. Luan, S. Paris, E. Shechtman, and K. Bala, “Deep photo style transfer,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2017, pp. 6997–7005.

[12] M. Grogan, R. Dahyot, ‘Robust registration of Gaussian mixtures for colour transfer’, arXiv:1705.06091 [cs], 2017

[13] I. Zabaleta, M. Bertalmío, ‘Photorealistic style transfer for video’. Sig. Process. Image Commun. 95, 116240 (2021)